500x faster than ANTLR

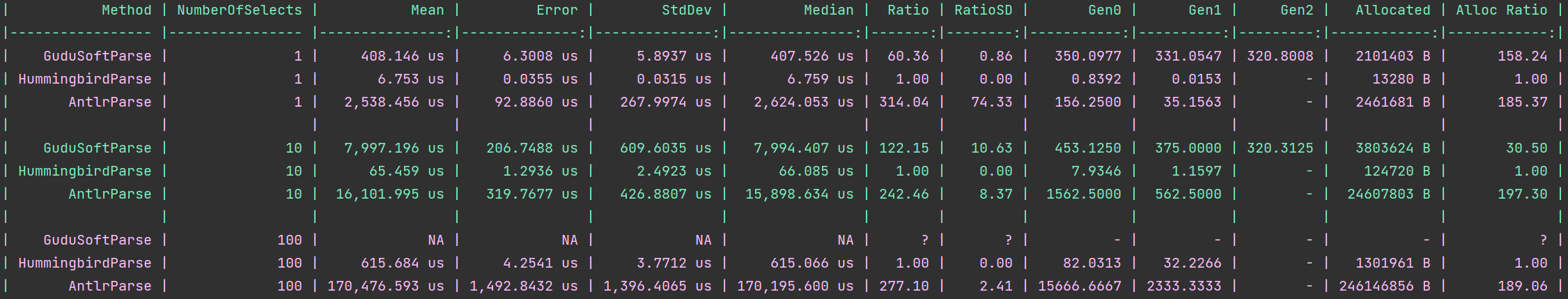

If you happened to read my article from half a year ago, where I introduced our HummingBird parser, you saw that it is incredibly fast: roughly 250x-300x faster than ANTLR.

I did some relaxing and self-therapeutic coding this week, optimizing for .NET 9.

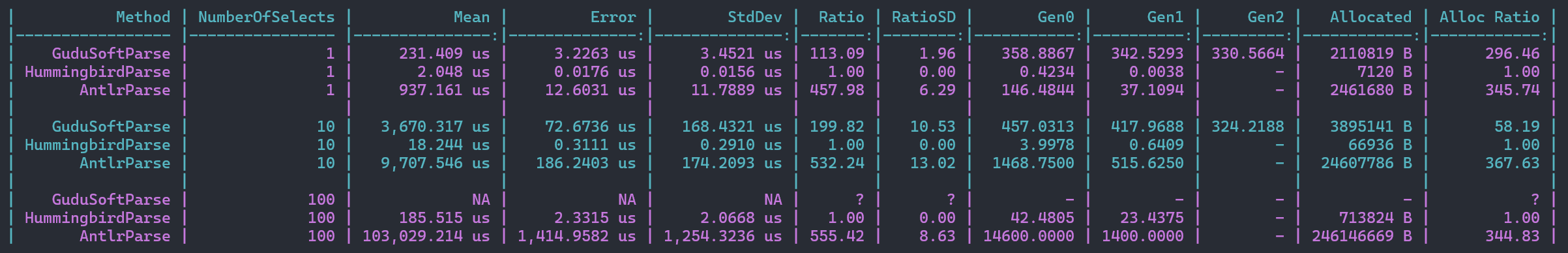

Not bad! We are now over 500x faster than ANTLR (this is a new machine, so all parsers are doing better in absolute numbers). Memory consumption is halved, and that's after increasing the padding of our internal in-memory tokens from 2 to 4 bytes.

I love the .NET team; they are doing fantastic work. Out of the box, each major .NET release makes our software some 20% faster just by recompiling. But there is also new functionality I was able to take advantage of. Surprisingly, SearchValues was not appropriate for our use case, but spans are golden. I had used them a little before and have now expanded their use.

There is only so much one can optimize in a few days of work when changes need to be applied to 4 major parser dialects at once, but there is quite a bit of headroom left. Optimization is more art than science, as we are balancing code readability and ease of use against performance benefits. Our parser generator produces all of the code; in fact, we have some 400k SLOC of auto-generated optimized code for the parsers we use.

I plan to come back to the optimization the next time there is a short gap in my daily obligations. We are now at 2 microseconds per query, and I can't help but wonder whether that can be trimmed to under 1 microsecond.

I know, there is nothing rational in that desire. Who cares? It's going to be fun to try to pull it off.